This AI can create talking characters that are good enough for a movie

ChatGPT's image generator model is gaining attention for its impressive ability to create talking characters that resemble those in movies. This AI's capability to craft deepfakes and images without regard for copyright or common sense is causing a stir in the industry. Its realistic output has sparked discussions about the future of AI-generated content for film and entertainment. The technology's potential to create lifelike characters has piqued the interest of many in the field. Excitement is building around the possibilities this AI tool could offer for the creation of high-quality movie content.

ChatGPT’s ability to ignore copyright and common sense while creating images and deepfakes is the talk of the town right now. The image generator model that OpenAI launched last week is so widely used that it’s ruining ChatGPT’s basic functionality and uptime for everyone. But it’s not just advancements in AI-generated images that we’ve witnessed recently.

Runway Gen-4 Video Model

The Runway Gen-4 video model lets you create incredible clips from a single text prompt and a photo, maintaining character and scene continuity, unlike anything we have seen before. The videos the company provided should put Hollywood on notice. Anyone can make movie-grade clips with tools like Runway’s, assuming they work as intended. At the very least, AI can help reduce the cost of special effects for certain movies.

It’s not just Runway’s new AI video tool that’s turning heads. Meta has a MoCha AI product of its own that can be used to create talking AI characters in videos that might be good enough to fool you. MoCha isn’t a type of coffee spelled wrong. It’s short for Movie Character Animator, a research project from Meta and the University of Waterloo.

MoCha AI Model

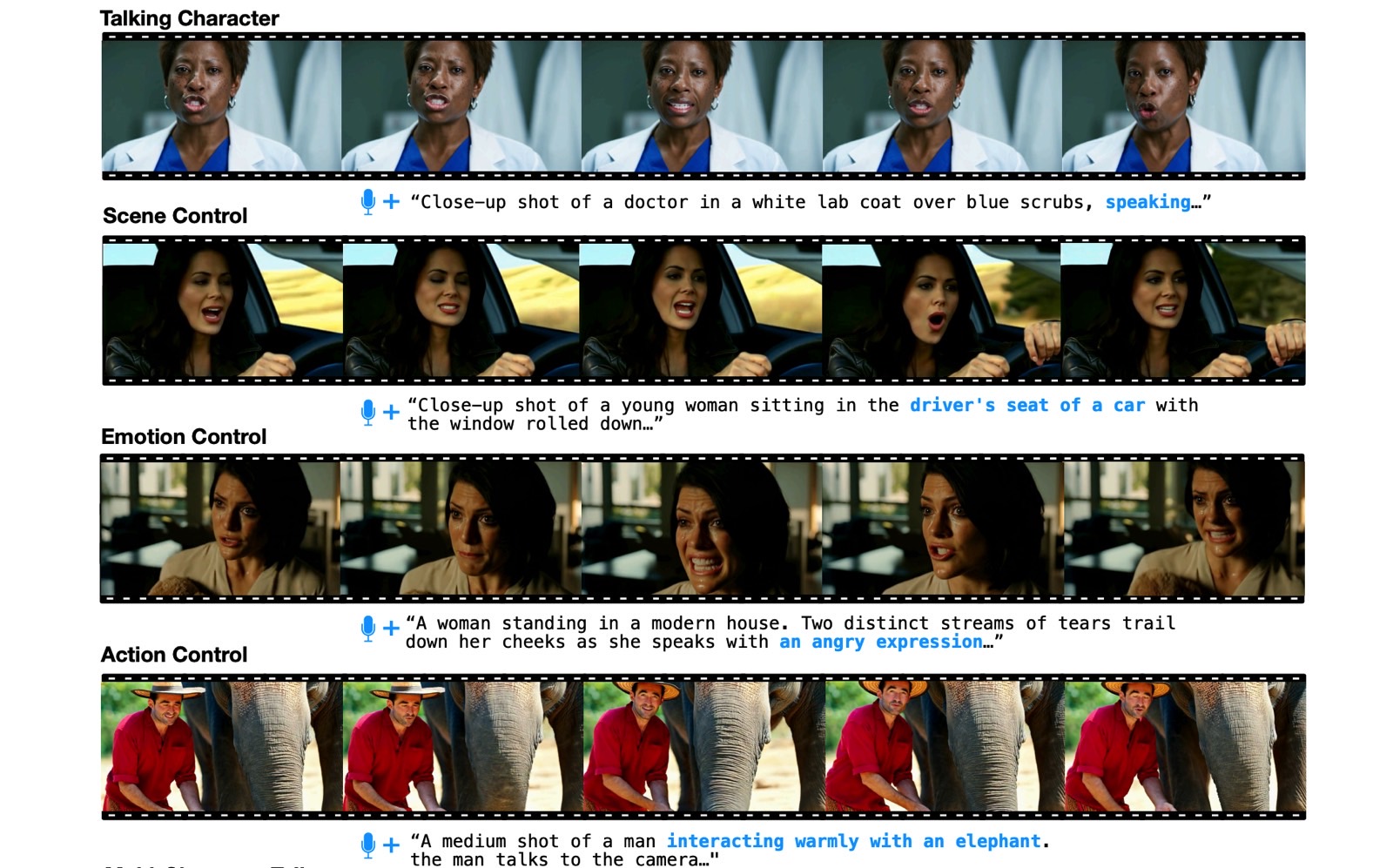

The basic idea of the MoCha AI model is pretty simple. You provide the AI with a text prompt that describes the video and a speech sample. The AI then puts together a video that ensures the characters “speak” the lines in the audio sample almost perfectly. The researchers provided plenty of samples that show MoCha’s advanced capabilities, and the results are impressive. We have all sorts of clips showing live-action and animated protagonists speaking the lines from the audio sample. MoCha takes into account emotions, and the AI can also support multiple characters in the same scene.

The results are almost perfect, but not quite. There are some visible imperfections in the clips. The eye and face movements are giveaways that we’re looking at AI-generated video. Also, while the lip movement appears to be perfectly synchronized to the audio sample, the movement of the entire mouth is exaggerated compared to real people.

First, there’s the Runway Gen-4 that we talked about a few days ago. The Gen-4 demo clips are better than MoCha. But that’s a product you can use, MoCha can certainly be improved by the time it becomes a commercial AI model.

Speaking of AI models you can’t use, I always compare new products that can sync AI-generated characters to audio samples to Microsoft’s VASA-1 AI research project, which we saw last April. VASA-1 lets you turn static photos of real people into videos of speaking characters as long as you provide an audio sample of any kind. Understandably, Microsoft never made the VASA-1 model available to consumers, as such tech opens the door to abuse.

Finally, there’s TikTok’s parent company, ByteDance, which showed a VASA-1-like AI a couple of months ago that does the same thing. It turns a single photo into a fully animated video. OmniHuman-1 also animates body part movements, something I saw in Meta’s MoCha demo as well. That’s how we got to see Taylor Swift sing the Naruto theme song in Japanese. Yes, it’s a deepfake clip; I’m getting to that.

Products like VASA-1, OmniHuman-1, MoCha, and probably Runway Gen-4 might be used to create deepfakes that can mislead.

Concerns and Recommendations

Meta researchers working on MoCha and similar projects should address this publicly if and when the model becomes available commercially. You might spot inconsistencies in the MoCha samples available online, but watch those videos on a smartphone display, and they might not be so evident. Remove your familiarity with AI video generation; you might think some of these MoCha clips were shot with real cameras. Also important would be the disclosure of the data Meta used to train this AI. The paper said MoCha employed some 500,000 samples, amounting to 300 hours of high-quality speech video samples, without saying where they got that data. Unfortunately, that’s a theme in the industry, not acknowledging the source of the data used to train the AI, and it’s still a concerning one.

Don't Miss: Claude’s special new mode is the right way to use AI for homework

The post This AI can create talking characters that are good enough for a movie appeared first on BGR.