Expert warns of misinterpretations in AI-generated research hypotheses

The use of AI in research has shown promising results in generating new ideas and solutions. There is a need for transparency in AI models to ensure the reliability and generalizability of their findings. Collaborations between different fields can lead to more innovative approaches to problem-solving. Understanding the underlying mechanisms of AI algorithms is crucial for their application in various scientific disciplines. Continued research and development are essential to enhance the capabilities and trustworthiness of AI models in scientific research.

Researchers Turn to AI Models for Hypothesis Development

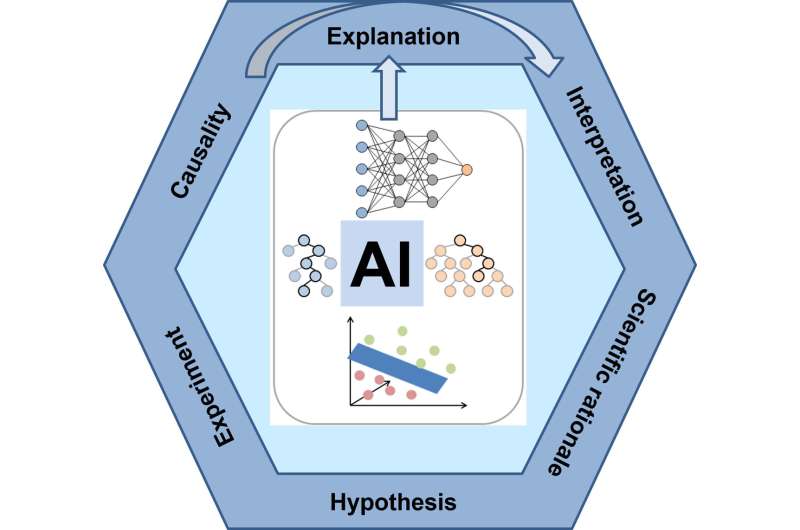

Researchers from chemistry, biology, and medicine are increasingly turning to AI models to develop new hypotheses. However, it is often unclear on what basis the algorithms reach their conclusions and to what extent those conclusions can be generalized. A publication by the University of Bonn now warns of misunderstandings in handling artificial intelligence. At the same time, it highlights the conditions under which researchers can most likely have confidence in the models. The study has been published in the journal Cell Reports Physical Science.

The Challenge of AI Model Interpretation

Adaptive machine learning algorithms are very powerful. Nevertheless, they have a disadvantage: how machine learning models arrive at their predictions is often not apparent from the outside. This lack of transparency makes it difficult to understand the basis for the decisions these models make.

AI models are often referred to as "black boxes" due to their opaque nature. Prof. Dr. Jürgen Bajorath, an expert in computational chemistry, emphasizes the importance of not blindly trusting the results of AI models. Efforts are being made within AI research to enhance the explainability of these models and to uncover the criteria used for decision-making.

The Role of Explainability in AI Research

The concept of "explainability" is crucial in AI research, aiming to uncover the criteria that AI models use as a basis for their predictions. Efforts are being made to make AI results more comprehensible and transparent to users. However, it is essential to not only focus on explainability but also consider the implications of the decision-making criteria chosen by AI models.
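One common way to probe which inputs a model relies on is permutation feature importance. The article does not name this technique, so the following is only a minimal sketch under stated assumptions: the toy data, `toy_model`, and the helper names are hypothetical and are not taken from the Bonn study. The idea is to shuffle one input column at a time and measure how much the prediction error grows. A feature the model ignores shows no increase.

```python
import random


def mean_squared_error(model, X, y):
    """Average squared difference between model predictions and targets."""
    return sum((model(row) - target) ** 2 for row, target in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Return, per feature, the mean increase in error after shuffling that feature."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            # Shuffle only column j, leaving every other column untouched.
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mean_squared_error(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


# Hypothetical toy setup: the target depends only on feature 0.
data_rng = random.Random(42)
X = [[data_rng.uniform(0, 1), data_rng.uniform(0, 1)] for _ in range(50)]
y = [2.0 * row[0] for row in X]


def toy_model(row):
    # Stand-in for a trained model; it uses feature 0 and ignores feature 1.
    return 2.0 * row[0]


scores = permutation_importance(toy_model, X, y)
print("importance of feature 0:", scores[0])
print("importance of feature 1:", scores[1])
```

In this sketch the score for feature 1 is zero because the stand-in model never reads it, while the score for feature 0 is positive. Such a score only says which inputs the model uses. It does not show that those inputs are causally or chemically meaningful, which is the distinction the article draws.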

Despite the challenges in interpreting AI models, they play a significant role in identifying correlations in large datasets that may not be obvious to humans. However, interpreting the results of AI models, especially in scientific contexts, requires careful consideration and validation.

Interpreting AI Results in Science

Chemical language models are gaining popularity in chemistry and pharmaceutical research for suggesting new compounds based on existing data. While these models can propose new molecules with desired properties, they often lack the ability to explain the rationale behind their suggestions.

It is essential to validate the suggestions made by AI models through experiments and plausibility checks. Over-interpreting the results of AI models without a solid scientific rationale can lead to misleading conclusions and false correlations. While AI has the potential to advance research, it is crucial to be aware of its limitations and the need for rigorous validation.

Conclusion

The use of AI in scientific research offers promising opportunities but also poses challenges in interpreting the results and ensuring their validity. Researchers must exercise caution in relying on AI models and prioritize thorough validation and plausibility checks to ensure the credibility of their findings.